It’s almost always difficult to decide, early on, which marketing strategies will achieve the best results.

After all, marketing can’t be based on intuition or guesswork. It takes data and concrete evidence to make decisions that move the needle.

A/B testing has proven to be one of the most effective ways to gather accurate, contextual data to guide your strategy.

Roughly 75% of websites with more than one million monthly visitors run A/B tests.

That popularity reflects how useful A/B testing is when crafting marketing strategies for digital assets such as display ads, websites, marketing emails, landing pages, and social media campaigns.

If you are a business owner working to grow your company, you will need A/B testing when creating digital assets, publishing content, delivering a smooth user experience, and pursuing your conversion goals.

But how do you do that? That is where this article comes in. Here, you will learn the fundamentals of A/B testing and how it can help your business grow.

What Is A/B Testing?

Also known as split testing, A/B testing is an online experiment run on a digital asset, such as a web page, display ad, mobile app, or email, to collect data and determine which version performs best against your goals, with a difference large enough to be statistically significant rather than the result of chance.

Here is how A/B testing works.

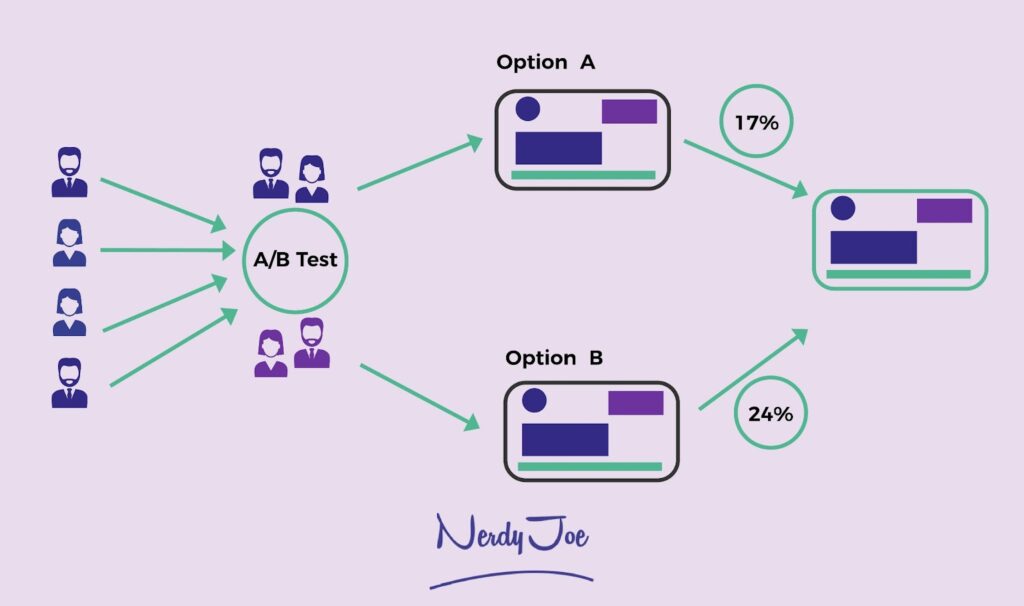

Two or more versions of the same digital asset, whether a landing page, marketing email, or website, are shown at random to a sample of people drawn from your audience.

Each person engages with the version they were randomly assigned. The marketer then gathers the data and runs a statistical analysis to determine which version has the greater impact on the audience or better achieves the goal.
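The random assignment step can be sketched in a few lines of Python. This is a minimal, hypothetical example: `assign_variant` and the experiment name are illustrative and not taken from any particular testing tool. It hashes each user ID together with the experiment name, so assignments look random across users but stay stable for any one user, which matters when the same visitor returns to a page.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Map a user to a variant using a hash of the experiment name and user ID.

    The same user always lands in the same variant for a given experiment,
    while different users are spread roughly evenly across the variants.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    counts = {"A": 0, "B": 0}
    for i in range(10_000):
        counts[assign_variant(f"user{i}", "subject-line-test")] += 1
    # The two counts should come out close to an even split.
    print(counts)
```

Hashing rather than calling a random number generator on every visit means no per-user state has to be stored to keep each person's experience consistent.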

This process removes much of the guesswork from launching a digital campaign and lets marketers make decisions backed by statistical analysis.

In A/B testing, A refers to the original, or “control”, version, and B refers to the variation being tested against it.

The version that performs best against your goal is considered the winner.

For instance, if you A/B test tactics to boost email opens, you send two versions of the same email, each using a different approach to encourage opens, to different people in the same audience.

The email with the higher open rate (or click rate, depending on your goal) is the better version.

Rolling out the winning version benefits your business, for example by raising your open rate and improving ROI.

A/B testing is an essential way to improve your conversion rate, because the data you collect lets you make better decisions.

You can use the collected data to understand your customers’ pain points, engagement, and behavior, and even gain insight into how they experience new features.

Why Is A/B Testing Important for Email Marketers?

Before you start sending emails to your customers, it is essential to A/B test them, whatever campaign elements you use. Here’s why.

It helps you meet your customers’ needs

The end goal of any marketing campaign is to meet the needs of the target audience.

Even if you believe your content is well crafted and relevant to your target audience, you are still basing your decisions on guesses about what will work.

What you think will work isn’t always what your customers or target audience will love most. It isn’t always what triggers an emotional response from them, and it isn’t always what gets them to open or click your messages.

Take a website, for example. Until you run an A/B test, you are only guessing what works for your visitors: which color draws their attention, which call-to-action (CTA) wording makes them click, and which offer, image, or power words resonate with them.

Do they prefer images or text? Video or images?

Running an A/B test in this context is like asking your target audience what works best for them.

It lets your subscribers decide what they want, so you can send emails tailored to their preferences and achieve better results.

It helps you get accurate data for proper decision making

Although you can use logic to predict how various campaign elements might influence potential customers, you can’t know in advance how much impact each one will have.

With so much advice available on how different email marketing elements contribute to a campaign’s success, you may not pick the strategy best suited to your growth.

You could also be biased and unintentionally create content that suits your own preferences rather than your customers’. As a result, you may not choose what actually drives the results you want.

A/B testing helps you find what works and what does not. When you A/B test emails, you collect concrete data that lets you make the most appropriate decision.

It boosts open and click-through rates

Because A/B testing compares different variables, it makes it easier to identify what appeals to your target audience.

By knowing your audience’s preferences, likes, and dislikes, you can compose emails that suit their needs, which makes them more likely to open your email when it lands in their inbox.

It is cost-effective

A/B testing shows you how each of your marketing efforts actually performed.

As a result, you can drop strategies that aren’t delivering the results you expected and focus on the productive ones.

That saves you the time, resources, and stress of investing energy in a strategy that doesn’t work for you.

Different Types of A/B Testing

Now that you know what A/B testing is and why it matters for email marketers, let’s look at its different types. There are three main types of A/B testing.

Split URL Testing

Split URL testing is a conversion rate optimization process in which the marketer tests a completely new version of an existing web page against the original to determine which one works better.

It differs from the other A/B testing types in that you don’t test versions of the same page. Instead, you test two different URLs. Here are some key benefits of this type of testing.

- It allows you to make significant changes to an existing website, especially to its design.

- It lets you track user behavior, or the user journey, on two separate landing pages and compare the outcomes. You can easily analyze the data to see which page yields the better conversion rate.

- It also splits the website visitors between the original web page and the variation.

- It lets you measure each version’s performance through metrics such as conversion rate.

Multivariate Testing

Multivariate testing is an experimentation method that lets you test variations of multiple page elements simultaneously and identify the best-performing combination among all of them.

It is more complex than standard A/B testing and better suited to advanced product, marketing, or development professionals.

Here, you can analyze multiple elements of each page at the same time, such as landing page headlines, calls to action, and banner images, to discover how much each contributes to the page and which variation wins.

When conducted properly, multivariate testing can save you from running multiple sequential A/B tests on a web page with the same goal.

Testing several variations in one experiment, rather than in a series of separate tests, saves you money, time, and effort, so you can make decisions faster.
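To see why multivariate testing is more demanding, it helps to count the combinations. The sketch below assumes three hypothetical page elements with two versions each; the element names and copy are illustrative only. Every element you add multiplies the number of combinations, and each combination receives only a slice of your traffic, which is why multivariate tests generally need more visitors than a simple A/B test.

```python
from itertools import product

# Hypothetical page elements and the candidate versions of each
elements = {
    "headline": ["Save time today", "Work smarter"],
    "cta": ["Start free trial", "Get started"],
    "banner": ["photo", "illustration"],
}


def build_combinations(elements):
    """Return every combination (one version per element) a multivariate test would serve."""
    names = list(elements)
    return [dict(zip(names, combo)) for combo in product(*elements.values())]


combos = build_combinations(elements)
print(len(combos))  # 2 * 2 * 2 = 8
for combo in combos:
    print(combo)
```

Adding a fourth element with three versions would raise the count from 8 to 24, spreading the same traffic across three times as many variations.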

Multi-Page Testing

Multi-page testing is an A/B testing method that lets you test changes made to elements across multiple pages.

There are typically two ways to do this. The first is funnel multi-page testing, in which you create new versions of every page in your sales funnel and test them against the control.

The second is conventional, or classic, multi-page testing, which shows how adding or removing an element (such as testimonials or security badges) affects the conversion rate.

This method keeps the experience consistent for your audience across pages, so the changes you test don’t disrupt the user experience.

How to Conduct A/B Testing: A Few Tips or Techniques to Consider

Conducting A/B testing for your email marketing campaigns is relatively easy. The general process is to choose what you want to test, create two or more variations, track engagement over a set period, and select the winning variation.

1 – Decide on the variable you want to test

Before conducting an A/B test, you need to decide what to test. Is it the subject line? The product image?

Start by thinking about your goals and what you would like to achieve with your campaigns, or about any issue you want to fix, such as low engagement.

Either way, reflecting on your goals will show you which metrics or elements you need to improve in your emails or in whatever marketing material you want to A/B test.

2 – Know how to make sense of the data

Suppose you are A/B testing your email campaigns with the goal of improving engagement. One element you can A/B test is the subject line, so you create different versions of it.

Given that you are A/B testing your email subject line, the metric you need to track is the open rate.

That’s because the open rate tells you which subject line appeals more to your subscribers. The same logic applies to testing product images.

In that case, the click-through rate tells you which design template was more successful.
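The sketch below shows how the choice of metric changes the outcome. The `VariantStats` class, `pick_leader` function, and all numbers are hypothetical. It computes click-through rate as clicks divided by delivered emails; some email tools instead divide clicks by opens (often called click-to-open rate), so check which definition your platform uses.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    name: str
    delivered: int  # emails that reached the inbox
    opens: int      # unique opens
    clicks: int     # unique clicks

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_through_rate(self) -> float:
        return self.clicks / self.delivered if self.delivered else 0.0


def pick_leader(variants, metric):
    """Return the variant with the highest value for the chosen metric."""
    return max(variants, key=lambda v: getattr(v, metric))


a = VariantStats("A", delivered=1000, opens=210, clicks=35)
b = VariantStats("B", delivered=1000, opens=245, clicks=31)

print(pick_leader([a, b], "open_rate").name)           # B
print(pick_leader([a, b], "click_through_rate").name)  # A
```

Here B wins on opens while A wins on clicks, so the "winner" depends entirely on the metric tied to your goal. A higher rate on its own is also not proof; the difference still needs to be statistically significant.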

Identifying the right metric to track is essential for collecting meaningful data and making good decisions.

3 – Pick the right sample size

Next, select the part of your audience you want to run the test on. If you have more than a thousand subscribers, a common approach is an 80/20 split, named after the 80/20 rule (the Pareto principle), which holds that roughly 20% of your efforts produce 80% of the results.

Applied to A/B testing, this means sending one variant to 10% of your subscribers and the other variant to another 10%. Once the results are in, the better-performing variant goes to the remaining 80% of subscribers.
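Here is a minimal sketch of the 10/10/80 split in Python, assuming your subscriber list is a simple list of email addresses. The function name `split_for_test` and the sample addresses are hypothetical. Shuffling first makes each group a random sample, so neither variant is skewed by how the list happens to be ordered (for example, oldest subscribers first).

```python
import random


def split_for_test(subscribers, test_fraction=0.10, seed=None):
    """Shuffle the list and carve out two equal test groups plus a holdout.

    With test_fraction=0.10, variant A and variant B each get 10% of the list,
    and the remaining ~80% later receives whichever variant wins.
    """
    if not 0 < test_fraction <= 0.5:
        raise ValueError("test_fraction must be greater than 0 and at most 0.5")
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)
    n = int(len(shuffled) * test_fraction)
    group_a = shuffled[:n]
    group_b = shuffled[n:2 * n]
    remainder = shuffled[2 * n:]
    return group_a, group_b, remainder


subscribers = [f"subscriber{i}@example.com" for i in range(5000)]
group_a, group_b, rest = split_for_test(subscribers, seed=7)
print(len(group_a), len(group_b), len(rest))  # 500 500 4000
```

Passing a fixed `seed` makes the split reproducible, which is handy if you need to rebuild the same groups later.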

On a bigger email list, this split gives you more data and more reliable results. Each 10% group still needs enough subscribers to reveal which version performs better.

However, if you are working with a smaller list (fewer than a thousand subscribers), you will need to test each variant on a larger share of your subscribers to get a significant result.

After all, if 13 people click email A and 15 click email B, the gap is too small to tell whether one version is genuinely better.
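To see why 13 versus 15 clicks is hard to call, here is a minimal two-proportion z-test, a standard way to compare two conversion rates. The send counts are an assumption: the example above doesn't say how many people received each email, so this sketch assumes 200 per variant. The function name is illustrative.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two conversion rates."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Pooled rate under the assumption that both versions perform the same
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: each email went to 200 subscribers; 13 clicked A, 15 clicked B
z, p = two_proportion_z_test(13, 200, 15, 200)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With these assumed numbers, z comes out around 0.39 and the p-value around 0.7, far above the commonly used 0.05 cutoff, so the two-click difference is easily explained by chance.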

So make your sample large enough to produce a reliable result.

4 – Time your A/B testing campaign

You need to define the period over which you will run the A/B testing campaign. The winning version is selected once the testing period is over, so make sure the test runs long enough to produce meaningful results.

If you stop too early, any difference between the two variations may not be statistically significant, which is why you need a long enough period to collect substantial data.

So, how long is long enough? The time it takes to reach statistically significant results depends on how you set up and run your A/B test. It could take hours … or days … or weeks.

A lot depends on how big your subscriber base is or how much traffic you get. Keep in mind that the fewer subscribers or the less traffic you have, the more time you’ll need to get results from your A/B test.
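You can estimate "long enough" before the test starts. The sketch below uses the standard sample-size approximation for comparing two proportions with a two-sided test at 5% significance and 80% power. Those defaults, the function names, and the example figures (a 20% baseline open rate, hoping to detect a lift to 23%, and 500 visitors a day) are assumptions for illustration, not numbers from this article.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate people needed in EACH variant to detect the given change
    with a two-sided two-proportion z-test."""
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline_rate * (1 - baseline_rate)
                        + expected_rate * (1 - expected_rate))
    ) ** 2
    return ceil(numerator / (baseline_rate - expected_rate) ** 2)


def days_needed(per_variant, variants, daily_traffic):
    """Days of traffic required to fill every variant, assuming an even split."""
    return ceil(per_variant * variants / daily_traffic)


n = sample_size_per_variant(0.20, 0.23)
print(n)
print(days_needed(n, variants=2, daily_traffic=500))
```

With these inputs the estimate comes to roughly 2,900 people per variant, or about 12 days at 500 visitors a day. For an email list, compare the per-variant figure with your list size instead. Halving the lift you want to detect roughly quadruples the required sample, which is why small lists and low-traffic sites need longer tests.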

5 – Test one element at a time and both variations simultaneously

Imagine you send two emails at the same time, with identical content and the same sender name. The only difference is the subject line.

After a few hours of testing, you see that Version B has a higher open rate.

Because the subject line is the only difference, you can be confident it is what made Version B perform better. If the sender name and the subject line had both changed, it would be hard to tell which element had the most impact.

That is why you should test one element at a time: it lets you attribute the result to a single change and draw clearer conclusions.

Three A/B Testing Use Cases

1 – HubSpot

Getting reviews from your customers can feel like an arduous task.

Perhaps that is why HubSpot decided to A/B test different ways of getting reviews from its customers: email versus in-app notifications.

HubSpot sent two email versions of the same message, plus an in-app notification, telling users they would get a $10 gift card if they left a review on Capterra. The first variant was a plain-text email.

Source: HubSpot

The second variant was a template email that included a certification.

Source: HubSpot

Meanwhile, the third variant was an in-app notification.

Source: HubSpot

The results revealed that users overlooked the in-app notification more often than the emails.

About 24.9% of recipients opened the first email and left a review, while 10.3% opened the in-app notification.

2 – Unbounce

On most landing pages, website marketers often ask their visitors for an email address to keep them updated on their offers.

However, Unbounce ran a test to determine whether users would rather give their email addresses or promote the company’s product on Twitter.

Both options benefit the company. Asking for an email means building a list of potential customers.

Meanwhile, asking your audience to tweet about your product could expose the brand to more people.

In the A/B test, the “control” version, the original landing page, asked users to tweet about the company’s product in exchange for an ebook.

Source: Unbounce

The variant version, which is also a landing page, requested that users provide their email addresses in exchange for an ebook.

Source: Unbounce

By the end of the test, users proved more willing to give their email addresses, and Unbounce saw a 24% increase in conversions on the email landing page.

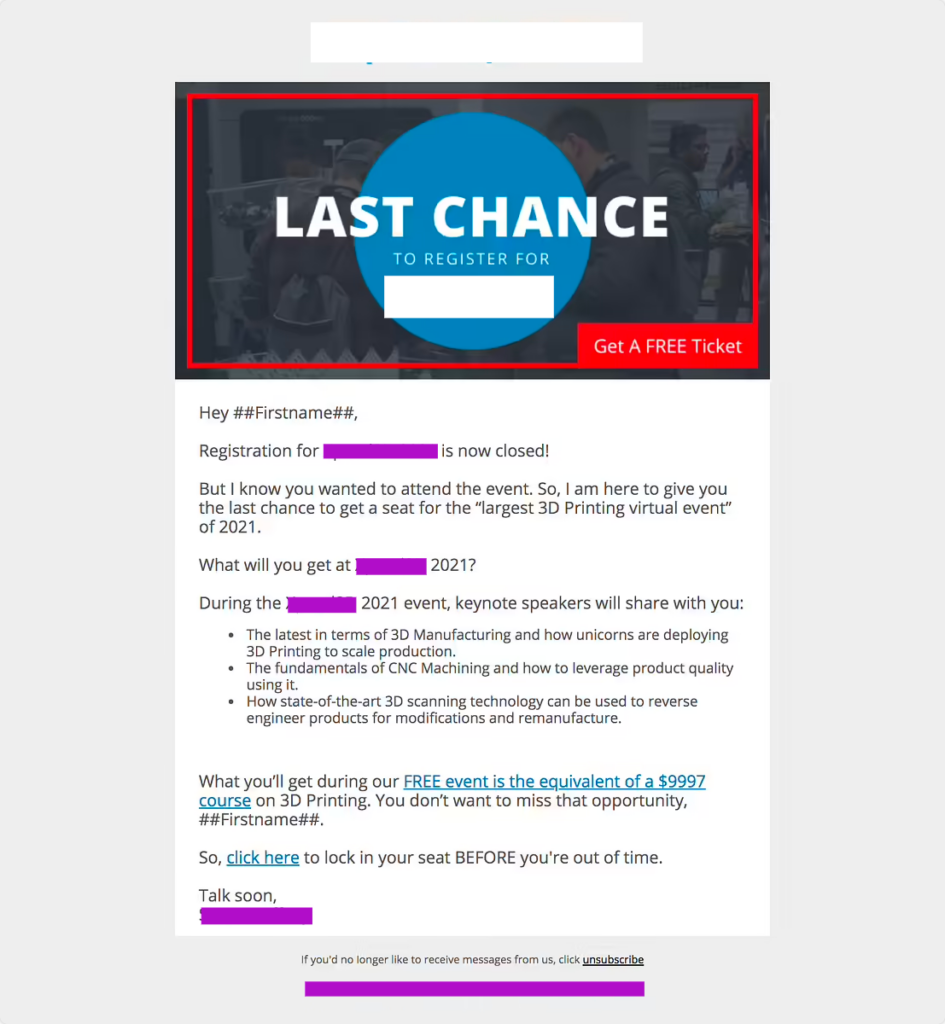

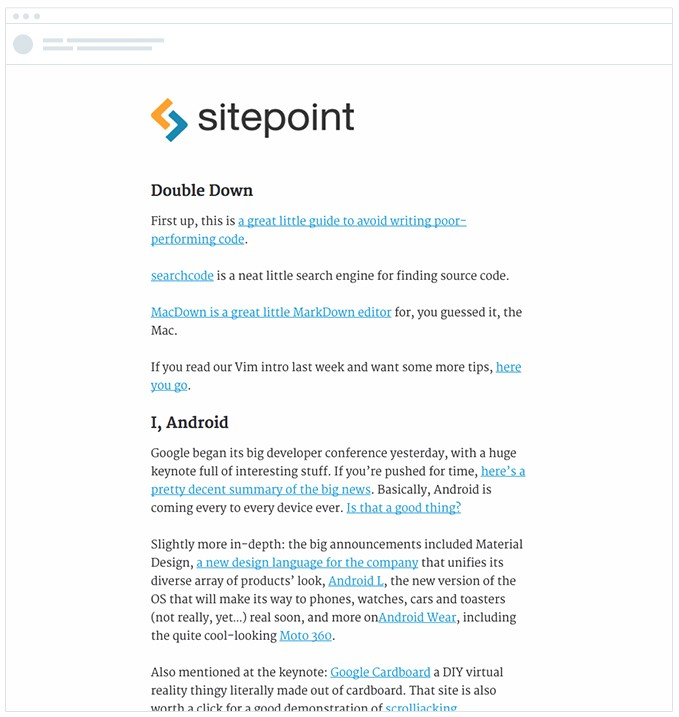

3 – SitePoint

SitePoint is another platform that A/B tests its email marketing. It tested a series of elements, including header styles, to determine which would perform better.

The team was unsure what the header should look like. Should it have a logo and a header image, or would the logo alone suffice?

They found the answer through A/B testing. Variant A had the header image and logo, while variant B had only the logo.

At the end of the test, the variant with only the logo won and increased conversion by 13%.

Key Takeaways

- Always A/B test your email campaigns. It helps you draw better conclusions backed by facts and statistical analysis.

- When split testing your marketing materials or email copy, change only one element at a time, but run both variations at the same time. This prevents confusion and makes the results easier to interpret.

- Understand each type of A/B testing and choose the one that best fits the needs of your future tests.